Technology Platform

Duckietown is a modular robotics and AI ecosystem with tightly integrated components designed to provide joyful learning experiences.

The Duckietown platform offers robotics and AI learning experiences through the integration of hardware, software, and learning materials.

Duckietown is modular, customizable, and state-of-the-art. It is designed for teaching, learning, and research: from exploring the fundamentals of computer science and automation to pushing the boundaries of knowledge.

To date, Duckietown has been used by learners as young as 14!

Hardware

Hardware is the most tangible part of Duckietown: a robotic ecosystem in which fleets of autonomous vehicles (Duckiebots) interact with each other and with the model urban environment in which they operate (Duckietown).

The ecosystem was originally designed to answer the question: what is the minimum hardware needed to deploy advanced single- and multi-robot autonomy solutions?

The Duckiebot is a minimal autonomy platform for investigating complex behaviors, learning about real-world challenges, and doing research.

The main onboard sensor is a front-facing camera. Actuators include two DC motors (arranged in a differential-drive configuration) and addressable RGB LEDs, used for signaling to other Duckiebots and for lighting the road. Newer Duckiebots also carry additional sensors such as an IMU, a time-of-flight sensor, and wheel encoders.
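A differential-drive robot steers by spinning its two wheels at different speeds. The sketch below shows the standard unicycle kinematics that relate wheel speeds to forward speed and yaw rate, and back. It is an illustration, not Duckietown code: the wheel radius and baseline values are assumptions, so use the calibration of your own robot.

```python
from __future__ import annotations

import math

# Assumed geometry for illustration; use the values from your robot's calibration.
WHEEL_RADIUS_M = 0.0318   # wheel radius, metres
BASELINE_M = 0.1          # distance between the two wheels, metres


def wheels_to_body(omega_left: float, omega_right: float) -> tuple[float, float]:
    """Forward kinematics: wheel speeds (rad/s) -> forward speed v (m/s), yaw rate w (rad/s)."""
    v_left = WHEEL_RADIUS_M * omega_left
    v_right = WHEEL_RADIUS_M * omega_right
    v = (v_right + v_left) / 2.0
    w = (v_right - v_left) / BASELINE_M
    return v, w


def body_to_wheels(v: float, w: float) -> tuple[float, float]:
    """Inverse kinematics: desired (v, w) -> (left, right) wheel speeds in rad/s."""
    v_left = v - w * BASELINE_M / 2.0
    v_right = v + w * BASELINE_M / 2.0
    return v_left / WHEEL_RADIUS_M, v_right / WHEEL_RADIUS_M


def step_pose(x: float, y: float, theta: float,
              v: float, w: float, dt: float) -> tuple[float, float, float]:
    """Advance a planar pose by one Euler step of unicycle kinematics."""
    return (x + v * math.cos(theta) * dt,
            y + v * math.sin(theta) * dt,
            theta + w * dt)


# Turning in place: equal and opposite wheel speeds give v = 0 and a non-zero yaw rate.
v, w = wheels_to_body(-5.0, 5.0)
```

Equal wheel speeds drive straight, opposite speeds rotate in place, and anything in between traces an arc. This is why two motors are enough for a Duckiebot to follow lanes and turn at intersections.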

Duckiebots are fully autonomous: the platform is completely decentralized, and all decision making happens onboard, on a Raspberry Pi or NVIDIA Jetson Nano board (credit-card-sized computers). The onboard battery provides hours of autonomy and, in the most recent models, advanced diagnostics as well.

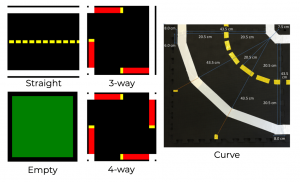

Duckietowns are structured, modular environments built on two layers, road and signal, which offer a repeatable yet flexible driving experience without fixed maps.

The road layer is defined by five segment types: straight, curve, 3-way intersection, 4-way intersection, and empty. Every segment is built on interconnectable tiles, which can be rearranged into any number of city layouts while maintaining rigorous appearance specifications that guarantee the robots' functionality.
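One way to reason about interconnectable tiles is to treat a town as a grid in which every tile exposes some open road edges, and every open edge must meet an open edge on the neighboring tile. The sketch below checks that rule for a layout. The tile names, the openings table, and the convention of rotations as clockwise quarter turns are hypothetical choices for illustration; this is not the Duckietown map file format.

```python
from __future__ import annotations

SIDES = ("N", "E", "S", "W")  # listed clockwise
OPPOSITE = {"N": "S", "E": "W", "S": "N", "W": "E"}
STEP = {"N": (-1, 0), "E": (0, 1), "S": (1, 0), "W": (0, -1)}  # (row, col) offsets

# Hypothetical encoding: open road edges of each tile type before rotation.
BASE_OPENINGS = {
    "straight": {"N", "S"},
    "curve": {"S", "E"},
    "3way": {"N", "E", "S"},
    "4way": {"N", "E", "S", "W"},
    "empty": set(),
}


def openings(tile_type: str, rotation: int) -> set[str]:
    """Open edges of a tile after `rotation` clockwise quarter turns."""
    return {SIDES[(SIDES.index(s) + rotation) % 4] for s in BASE_OPENINGS[tile_type]}


def check_layout(grid: list[list[tuple[str, int]]]) -> list[str]:
    """List every open road edge that does not meet an open edge on the neighboring tile."""
    problems = []
    for r, row in enumerate(grid):
        for c, (tile_type, rotation) in enumerate(row):
            for side in sorted(openings(tile_type, rotation)):
                nr, nc = r + STEP[side][0], c + STEP[side][1]
                if not (0 <= nr < len(grid) and 0 <= nc < len(grid[nr])):
                    problems.append(f"tile ({r}, {c}): road exits the map on side {side}")
                elif OPPOSITE[side] not in openings(*grid[nr][nc]):
                    problems.append(f"tile ({r}, {c}): side {side} is blocked by tile ({nr}, {nc})")
    return problems


# A 2x2 closed loop made of four curves.
loop = [
    [("curve", 0), ("curve", 1)],
    [("curve", 3), ("curve", 2)],
]
```

An empty result means every road leads somewhere. Replacing one curve in `loop` with an empty tile produces two reported problems, one for each road that now dead-ends.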

The signal layer in Duckietown is made of traffic signs and road infrastructure.

Signs show both easily machine-readable markers (AprilTags) and human-readable symbols, providing scalable complexity in perception. Among other uses, signs enable Duckiebots to localize on the map and to interpret the type and orientation of intersections.
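Localizing from a sign comes down to composing rigid-body transforms. If the map stores where each tag is, and the robot measures where the tag is relative to itself, then the robot's pose in the map is T_map_robot = T_map_tag composed with the inverse of T_robot_tag. The sketch below works in 2D (SE(2)) for brevity. Real AprilTag detectors return full 3D poses, and the frame conventions and function names here are assumptions.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


def wrap_angle(a: float) -> float:
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


@dataclass(frozen=True)
class Pose2D:
    """Pose of a child frame in a parent frame: position (m) and heading (rad)."""
    x: float
    y: float
    theta: float

    def compose(self, other: Pose2D) -> Pose2D:
        """If self is the pose of B in A and other is the pose of C in B, return C in A."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(self.x + c * other.x - s * other.y,
                      self.y + s * other.x + c * other.y,
                      wrap_angle(self.theta + other.theta))

    def inverse(self) -> Pose2D:
        """If self is the pose of B in A, return the pose of A in B."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(-c * self.x - s * self.y, s * self.x - c * self.y, wrap_angle(-self.theta))


def robot_pose_in_map(tag_in_map: Pose2D, tag_in_robot: Pose2D) -> Pose2D:
    """T_map_robot = T_map_tag composed with the inverse of T_robot_tag."""
    return tag_in_map.compose(tag_in_robot.inverse())


# Tag at (2, 1) in the map, seen 0.5 m straight ahead: the robot must be at (1.5, 1).
pose = robot_pose_in_map(Pose2D(2.0, 1.0, 0.0), Pose2D(0.5, 0.0, 0.0))
```

With several tags in view, each one yields its own estimate, and these can be combined (for example, averaged or filtered over time) for a more robust pose.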

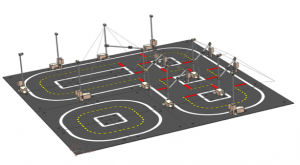

The road infrastructure consists of traffic lights and watchtowers, which are robots in their own right. At the hardware level, traffic lights are Duckiebots without wheels, and watchtowers are traffic lights without LEDs.

Although immobile, the road infrastructure turns every Duckietown into a robot itself: it can sense, think, and interact with the environment.

A Duckietown instrumented with enough watchtowers can be transformed into a Duckietown Autolab: an accessible, reproducible setup for benchmarking the behaviors of fleets of self-driving cars. An Autolab can be programmed to localize robots and communicate with them in real time.

Duckiebot

- Sensing: Camera, Encoders, IMU, Time of Flight
- Computation: Raspberry Pi, Jetson Nano
- Actuation: DC motors, LEDs
- Memory: 32GB, class 10
- Power: 5V, 10Ah

Duckiedrone

- Sensing: Time of Flight, Camera, IMU
- Computation: Raspberry Pi
- Actuation: DC motors, LEDs
- Memory: 32GB, class 10

Duckietown

- Modularity: assemble and combine fundamental building blocks
- Structure: appearance specifications (colors, geometries) guarantee functionality
- Smart City: road and signal layers can be augmented with a network of smart traffic lights and watchtowers to create a real city-robot.

Software

Duckietown is designed to scale in difficulty so that it can adapt to the user’s skill level: from zero to scientist.

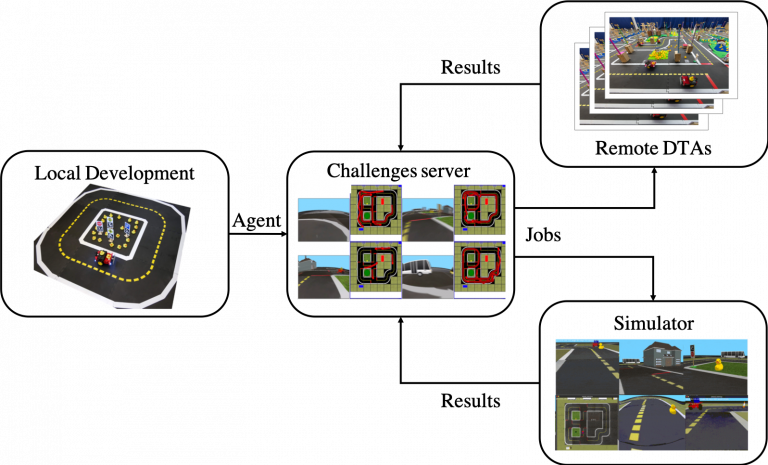

The software architecture in Duckietown allows users to develop in simulation and on real hardware, and to evaluate their agents locally or remotely, in the cloud or on real hardware.

Duckiebots and Duckietowns can be operated via a terminal (for experts) or through the web-based duckie-dashboard GUI (for convenience).

The robotics functionalities offered in the base implementation are encapsulated in Docker containers, which include ROS (Robot Operating System) and Python code.

Docker ensures code reproducibility: whatever works at some point in time keeps working, because all dependencies are included in the application's container. Docker also eases modularity, allowing individual functional blocks to be substituted without compatibility problems.

Communication between functionalities (perception, planning, high- and low-level control, etc.) is achieved through ROS, a well-known open source middleware for robotics.
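To make functionalities talking over named topics more concrete, the sketch below mimics the publish/subscribe pattern that ROS topics implement, using a tiny in-process message bus. It is not ROS and does not use the ROS API. There is no networking or message typing, and delivery is synchronous. The topic names, message fields, and node functions are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Any, Callable


class MessageBus:
    """Minimal in-process publish/subscribe bus (an illustrative stand-in, not ROS)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        """Register a callback to be invoked for every message on `topic`."""
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> None:
        """Deliver `message` synchronously to every subscriber of `topic`."""
        for callback in self._subscribers[topic]:
            callback(message)


# Hypothetical topic names and message layouts, for illustration only.
bus = MessageBus()
wheel_log: list[dict] = []


def planning_node(detection: dict) -> None:
    """Turn a perception result into a velocity command: stop if something is ahead."""
    v = 0.0 if detection["obstacle_ahead"] else 0.2
    bus.publish("/car_cmd", {"v": v, "omega": 0.0})


def control_node(cmd: dict) -> None:
    """Stand-in for low-level control: record the command it would execute."""
    wheel_log.append(cmd)


bus.subscribe("/obstacle_detection", planning_node)
bus.subscribe("/car_cmd", control_node)

# A perception node would publish these after processing camera frames.
bus.publish("/obstacle_detection", {"obstacle_ahead": False})
bus.publish("/obstacle_detection", {"obstacle_ahead": True})
```

Because each node only knows topic names, the planning node could be swapped for a different implementation without touching perception or control. This is the kind of decoupling that topics provide.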

Duckietown uses mostly Python, although ROS supports different languages too.

ROS nodes running inside Docker containers rely on a custom operating system (duckie-OS) that provides an additional layer of abstraction, which allows complex sequences of operations to be run with single lines of code. For example, with only three lines of code you can train an agent in simulation, “package” it in a Docker container, and deploy it on a Duckiebot (real or virtual).

Algorithms

- One code base: for all users worldwide
- Many behaviors: test different perception, control, planning, coordination and ML solutions
- Collaborative development: open source, hosted on GitHub. Anyone can participate in the development.
- Free autonomy stack: start learning autonomy in... full autonomy!

Architecture

- Linux: start with Ubuntu
- Docker: sort of like a set of virtual machines, but more efficient
- Portainer: an intuitive way to manage programs (containers) in Docker
- Duckietown OS: an extra layer of abstraction to simplify life
- ROS: the famous Robot Operating System manages real-time communications between the various components of the software architecture
- Python: although other languages are supported, all our code is written in Python

Gym-Duckietown

- Collaborative development: the software in Duckietown is open source, hosted on GitHub. Anyone can participate in the development.
- Free: enough said
- Physically realistic
- Portability: run the same agents on the physical Duckiebots
- ML: built for training and testing AI algorithms

The Duckietown Simulator

Duckietown includes a simulator, Gym-Duckietown, written entirely in Python/OpenGL (Pyglet). The simulator is designed to be physically realistic and closely matched to the real world, so that algorithms developed in simulation can be ported to physical Duckiebots with a simple click.

In the simulator you can place agents (Duckiebots) in simulated cities: closed circuits of streets, intersections, curves, obstacles, pedestrians (ducks) and other Duckiebots. It can get pretty chaotic!

Gym-Duckietown is fast, open-source, and incredibly customizable. Although the simulator was initially designed to test algorithms for keeping vehicles in the driving lane, it has now become a fully functional urban driving simulator suitable for training and testing machine learning, reinforcement learning, imitation learning, and, of course, more traditional robotics algorithms.
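For the lane-keeping task mentioned above, a common classical baseline is a feedback controller on the robot's pose relative to the lane: the lateral offset d and the heading error phi. The sketch below is a proportional version of that idea, with a toy closed-loop simulation of simple lane-relative kinematics (d' = v·sin(phi), phi' = omega). Gains, speed, saturation limit, and sign conventions (d positive to the left, phi positive counterclockwise) are illustrative assumptions. They are not the interface or parameters of Gym-Duckietown or the Duckietown stack.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class LaneController:
    """Proportional steering on lateral offset d (m) and heading error phi (rad).

    All numbers are illustrative assumptions, not tuned values.
    """
    v_ref: float = 0.2       # constant forward speed, m/s
    k_d: float = 3.0         # yaw-rate gain per metre of lateral offset
    k_phi: float = 2.0       # yaw-rate gain per radian of heading error
    omega_max: float = 4.0   # yaw-rate saturation, rad/s

    def command(self, d: float, phi: float) -> tuple[float, float]:
        """Return (v, omega) that steers the robot back toward the lane center."""
        omega = -self.k_d * d - self.k_phi * phi
        omega = max(-self.omega_max, min(self.omega_max, omega))
        return self.v_ref, omega


def simulate(controller: LaneController, d0: float, phi0: float,
             dt: float = 0.05, steps: int = 200) -> tuple[float, float]:
    """Euler-integrate toy lane-relative kinematics: d' = v*sin(phi), phi' = omega."""
    d, phi = d0, phi0
    for _ in range(steps):
        v, omega = controller.command(d, phi)
        d += v * math.sin(phi) * dt
        phi += omega * dt
    return d, phi


# Start 10 cm off-center, parallel to the lane, and drive for 10 simulated seconds.
d_final, phi_final = simulate(LaneController(), d0=0.1, phi0=0.0)
```

Linearizing gives d'' + k_phi·d' + v·k_d·d = 0, which is stable for positive gains, so the offset decays toward zero. On a real robot, d and phi would be estimated by a perception pipeline rather than known exactly.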

With Gym-Duckietown you can explore a range of behaviors: from simple lane following to complete urban navigation with dynamic obstacles. In addition, the simulator comes with a number of features and tools that allow you to bring algorithms developed in simulation quickly to the physical robot, including advanced domain randomization capabilities, accurate physics at the level of dynamics and perception (and, most importantly: ducks waddling around).
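In general terms, domain randomization means resampling simulator parameters (lighting, sensor noise, dynamics) at every episode, so that a learned policy does not overfit to one rendering of the world. The sketch below shows the idea in plain Python. The parameter names, ranges, and helper functions are hypothetical and are not Gym-Duckietown's configuration options.

```python
from __future__ import annotations

import random
from dataclasses import dataclass


@dataclass(frozen=True)
class EpisodeParams:
    """Hypothetical parameters resampled at the start of every episode."""
    light_gain: float        # multiplier applied to pixel brightness
    pixel_noise_std: float   # std of additive Gaussian noise, on the 0-255 scale
    motor_gain: float        # multiplier simulating wheel/motor mismatch


# Illustrative ranges only; they are not tuned for any real setup.
RANGES = {
    "light_gain": (0.6, 1.4),
    "pixel_noise_std": (0.0, 8.0),
    "motor_gain": (0.9, 1.1),
}


def sample_params(rng: random.Random) -> EpisodeParams:
    """Draw each parameter uniformly from its range."""
    return EpisodeParams(**{name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()})


def randomize_image(pixels: list[int], params: EpisodeParams, rng: random.Random) -> list[int]:
    """Scale brightness and add Gaussian noise to 8-bit pixels, clipping to [0, 255]."""
    out = []
    for p in pixels:
        value = p * params.light_gain + rng.gauss(0.0, params.pixel_noise_std)
        out.append(min(255, max(0, round(value))))
    return out


rng = random.Random(0)
for episode in range(3):
    params = sample_params(rng)
    # In a real training loop, the simulator would be reset with these parameters.
    frame = randomize_image([0, 128, 255], params, rng)
```

Seeding the generator keeps experiments repeatable while still exposing the agent to a different world in each episode.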

Experimental datasets

As a complement to the simulation environment and standardized hardware, we provide a database of Duckiebot camera footage with associated technical data (camera calibrations, motor commands) that is continuously updated.

This data is useful both for training certain types of machine learning algorithms (learning by imitation) and for developing perception algorithms that work in the real world.
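To turn logs like these into imitation-learning data, each camera frame must be paired with a motor command. The sketch below pairs every frame with the first command issued at or after its capture time, on the assumption that this is the driver's response to what the frame shows. The record layout, the `max_delay` cutoff, and the function names are hypothetical and do not describe the format of the Duckietown logs.

```python
from __future__ import annotations

import bisect
from dataclasses import dataclass


@dataclass(frozen=True)
class MotorCommand:
    """Hypothetical log record: a time-stamped pair of wheel commands."""
    stamp: float   # seconds
    left: float    # normalized left-wheel command, assumed in [-1, 1]
    right: float   # normalized right-wheel command, assumed in [-1, 1]


def pair_frames_with_commands(frame_stamps: list[float],
                              commands: list[MotorCommand],
                              max_delay: float = 0.2) -> list[tuple[float, MotorCommand]]:
    """Pair each frame with the first command issued at or after it.

    `commands` must be sorted by stamp. Frames with no command within
    `max_delay` seconds after capture are dropped.
    """
    cmd_stamps = [c.stamp for c in commands]
    pairs = []
    for t in frame_stamps:
        i = bisect.bisect_left(cmd_stamps, t)  # index of first command with stamp >= t
        if i == len(cmd_stamps) or cmd_stamps[i] - t > max_delay:
            continue
        pairs.append((t, commands[i]))
    return pairs


commands = [MotorCommand(0.0, 0.5, 0.5), MotorCommand(0.1, 0.4, 0.6), MotorCommand(0.2, 0.6, 0.4)]
pairs = pair_frames_with_commands([-0.5, 0.05, 0.1, 0.15, 0.6], commands)
```

Whether to use the next command or the one active at capture time depends on the control latency of the logging setup. Swapping `bisect_left` for `bisect_right(...) - 1` gives the latter.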

Given the standardization of the hardware and its international distribution, this database contains information about a multitude of different environmental conditions (light, road surfaces, backgrounds, …) and experimental imperfections, which is what makes robotics fun!

The logs

- Camera calibration: extrinsic and intrinsic for each Duckiebot
- Video: 640×480 pixels
- Motor commands: time-stamped!
- Richness of data: tens of hours, from hundreds of Duckiebots, growing steadily
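The intrinsic calibration stored with each log describes how 3D points in front of the camera map to pixels in the 640×480 frames. Under an ideal pinhole model with focal lengths fx, fy and principal point (cx, cy), a point (x, y, z) in the camera frame lands at u = fx·x/z + cx, v = fy·y/z + cy. The sketch below implements that formula. The numeric intrinsics are made up. Real calibrations also include lens-distortion coefficients, and the extrinsic calibration relating the camera to the robot and the road is a separate transform. Both are ignored here.

```python
from __future__ import annotations

IMAGE_WIDTH, IMAGE_HEIGHT = 640, 480  # frame size of the logged video


def project(point_cam: tuple[float, float, float],
            fx: float, fy: float, cx: float, cy: float) -> tuple[float, float]:
    """Project a camera-frame point (x right, y down, z forward; metres) to pixels.

    Ideal pinhole model only: lens distortion is ignored.
    """
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point is not in front of the camera")
    return fx * x / z + cx, fy * y / z + cy


def in_image(u: float, v: float) -> bool:
    """True if pixel coordinates fall inside the 640x480 frame."""
    return 0 <= u < IMAGE_WIDTH and 0 <= v < IMAGE_HEIGHT


# Hypothetical intrinsics, for illustration only.
FX = FY = 300.0
CX, CY = IMAGE_WIDTH / 2, IMAGE_HEIGHT / 2

u, v = project((0.1, 0.05, 0.5), FX, FY, CX, CY)
```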


Operation Manuals

To accompany Duckietown users in this adventure, we provide detailed step-by-step instructions for all steps of the learning process: from assembling a box of parts to controlling a fleet of self-driving cars roaming around in a smart city.

The spirit of the Duckietown operation manuals, which can be found in the Duckumentation, is to provide executive, hands-on directives for getting specific things to work. Each section includes graphics, prerequisite knowledge, and expected learning outcomes, as well as troubleshooting sections and demo videos when applicable.

Learning materials

The original subtitle of the Duckietown project was:

“From a box of components to a fleet of self-driving cars in just 3427 steps, without hiding anything.”

Since then, the number of steps has grown, but the spirit has remained the same. In Duckietown, all the information needed to use, explore, and develop the platform is available in our online library (the “Duckuments”, or “Duckiedocs”).

The documentation provides instructions, operating manuals, theoretical preliminaries, links to descriptions of the code used, and “demos” of fundamental behaviors, at the level of a single robot or a fleet.

The documentation is open source, collaborative (anyone can contribute, and contributions are moderated to ensure quality), and free.

Duckumentation

- Comprehensive: from linear algebra to the state of the art in ML
- Useful: from theory, to algorithms, to deployment
- Collaborative: anyone can contribute!